Building AI Muscle

Are there any tech startups that don't care about AI these days? At IETech, AI muscle is a key strategic component of the Knowledge Exchange products we are working on. These days, a company of any size and maturity can easily incorporate AI into its product lineup, and it doesn't even have to be a traditional technology company. This has been made possible by cloud platforms such as AWS, Azure, and the like. About nine months ago, IETech made a strategic decision to invest in and build an in-house, general-purpose AI muscle machine. Although AWS and Azure provide everything we might need, training custom AI models on them is impractical and expensive.

When training custom models, one has to invest computing resources in the design of the AI architecture, which includes the neural network layout and all the hyper-parameters. In traditional software engineering you have the luxury of designing the architecture upfront, or of working on parts of the architecture in isolation. Training a neural network is nothing like that. Although an AI model is composed of many parts, these parts are not autonomous: each means nothing without the context of the others.

Each part of a neural network has a set of parameters that can take arbitrary values. Think of them as the knobs on an old-fashioned radio. Suppose you have such a radio at home and you set it to your favourite station. That exact setting might not work when you take your radio camping next weekend. As a matter of fact, you might not be able to tune your radio to that station at the campground at all. The real problem is that you don't know that ahead of time! The only way to find out is to travel to your camping spot and try it then and there. Such is the nature of designing and tuning a custom AI model, or neural network.
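To make the radio-knob analogy concrete, here is a minimal sketch of why tuning adds up so quickly: every combination of knob settings has to be tried with its own training run. The knob names, their values, and the two-hour cost per run are all illustrative assumptions, not figures from our projects.

```python
import itertools

# Hypothetical search space: knob names and values are illustrative only.
search_space = {
    "learning_rate": [1e-2, 1e-3, 1e-4],
    "hidden_layers": [2, 4, 8],
    "batch_size": [32, 64, 128],
    "dropout": [0.0, 0.25, 0.5],
}


def enumerate_configs(space):
    """Return every combination of knob settings as a list of dicts."""
    names = list(space)
    value_lists = [space[name] for name in names]
    return [dict(zip(names, values)) for values in itertools.product(*value_lists)]


configs = enumerate_configs(search_space)

# Assumed cost of a single training run, in hours (a placeholder, not a measurement).
hours_per_run = 2.0
total_hours = len(configs) * hours_per_run

print(f"{len(configs)} training runs, about {total_hours:.0f} hours of compute")
```

With just four knobs and three settings each, an exhaustive sweep already means 81 separate training runs. Smarter search strategies reduce that number, but none of them removes the need to actually train and see.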

Using the cloud to train a neural network quickly becomes cumbersome and very expensive. If, however, you are not training a custom AI model but instead want to integrate an already trained model that is not core to your business offering, such as speech-to-text, then a cloud offering might be the most efficient way to get that AI. Let's say your company has a phone app that helps people find the best mortgage rates, and it now wants a competitive advantage by exceeding the new Accessibility Standards. Integrating speech-to-text might just be your ticket. It would not make sense for your financial advisory company to build in-house expertise in such a highly specialized field. The conclusion: the cloud is awesome for getting off-the-shelf AI, but not if you are working from scratch.

IETech's core business is custom text mining and analysing usage patterns of knowledge and licenses, so in-house AI muscle is indispensable. The machine we built cost just under $8K and is capable of training several models at a time! We still use the cloud when a client's needs call for it, but we are much more cost effective on the cloud because of our in-house computing muscle. It has been a few months and we are already seeing how much more effectively we can turn around projects for clients. We have just begun! We are now exploring NVLink, a direct communication link between the GPUs that speeds up training by minimising data transfers during training.

Call us at 647-465-8110 to engineer the future of your digital assets.

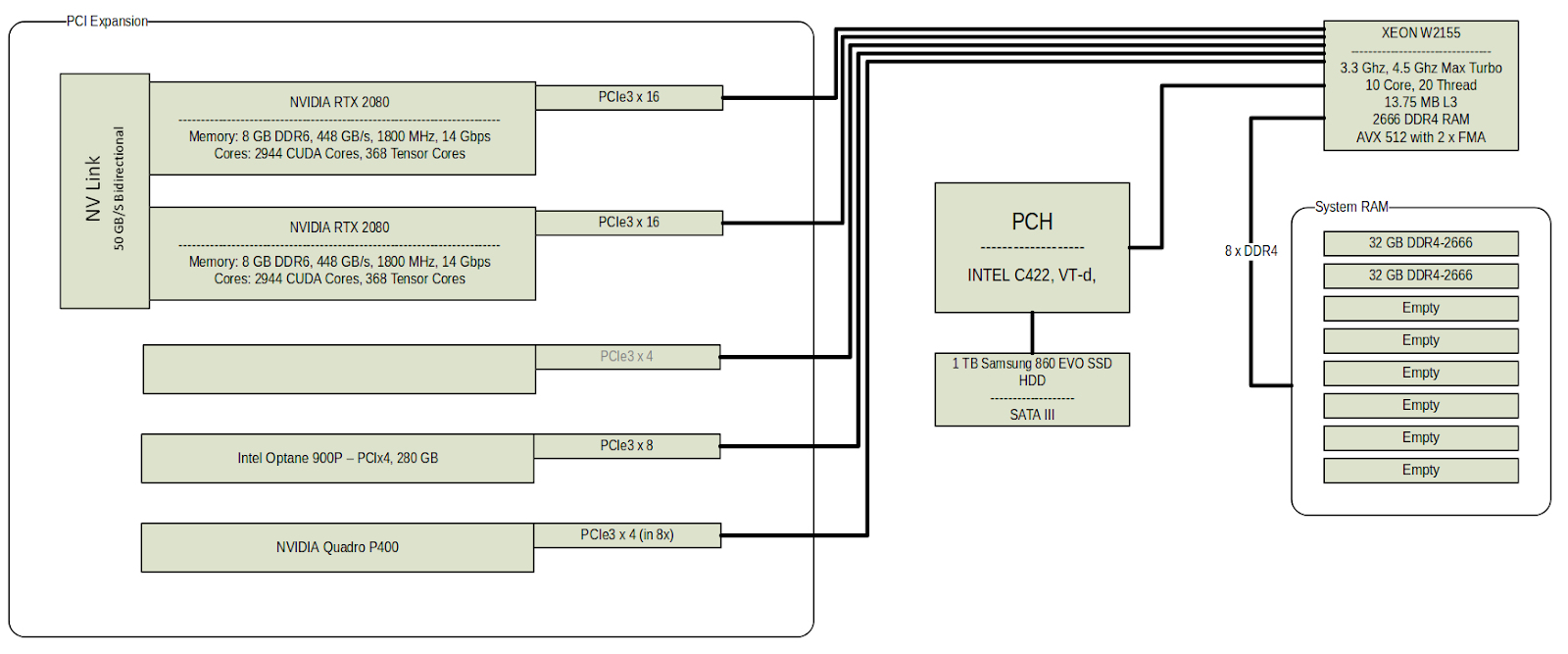

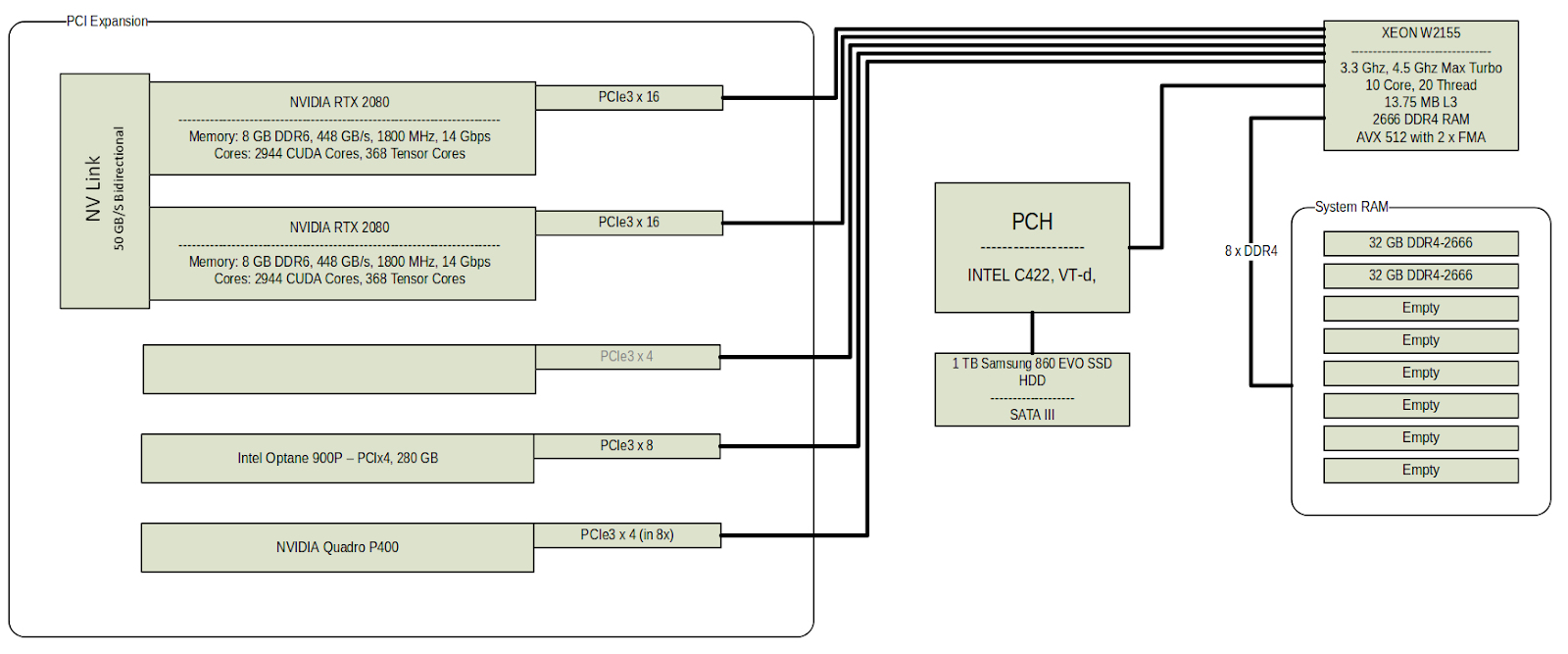

Here are the details of our setup:

HARDWARE:


An AI muscle machine is used to train neural networks on large sets of data. In addition, it is commonly required to transform many gigabytes of data into new files and structures. These tasks can take many days or weeks to complete. As a result, increasing overall performance by even 10% saves many hours or even days of processing time.
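As a rough illustration of that arithmetic, the sketch below assumes that "10% more performance" means 10% higher throughput, so a job finishes in 1/1.1 of its original time. The job lengths are hypothetical examples, not measurements from our workloads.

```python
def hours_saved(job_hours, throughput_gain):
    """Hours saved on a job when throughput rises by throughput_gain (0.10 = 10%)."""
    faster_hours = job_hours / (1.0 + throughput_gain)
    return job_hours - faster_hours


# Hypothetical job lengths in days; chosen only to show the scale of the saving.
for days in (3, 7, 14):
    saved = hours_saved(days * 24, 0.10)
    print(f"{days}-day job: about {saved:.1f} hours saved")
```

For a two-week job, the saving is more than a full day of machine time, and the same saving recurs on every subsequent run.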

Long-running processes can be interrupted by errors at the hardware level, which corrupt data and cost work and time. Server and professional workstation grade hardware provides mechanisms to detect and correct these errors and keep the system stable (Error-Correcting Code, or ECC, memory). The Xeon W-2155 processor was selected for its ability to use large amounts of ECC memory and for its support for advanced math instruction sets.

The Xeon W-2155 processor has 10 cores / 20 threads and can run at 3.3 GHz on all cores while using very demanding mathematical instruction sets (AVX-512). Most consumer-level processors cannot run these instructions at all, and many server processors are limited to a single AVX-512 execution unit per core. This processor has two AVX-512 units on each of its 10 cores (in effect, one for each of its 20 threads). You can see the performance specifications from Anandtech.

Graphics Processing Units (GPUs) are a critical part of any AI muscle. This machine uses two RTX 2080 GPUs connected together using NVLink. Much research went into evaluating the options for our GPU purchase. Ultimately, what decided it was that the new GeForce RTX 2080s can process mixed precision using dedicated tensor cores, previously found only in professional or enterprise grade products (for a deep dive into the value of tensors in GPU programming, see the previous article on TensorFlow).
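To show why mixed precision matters, here is a small pure-Python emulation of the general idea: inputs are kept in half precision (fp16), but sums are accumulated in a wider format (fp32). This is only a sketch of the numerical effect using the standard `struct` module; it does not use the GPU or the tensor cores, and the step size and iteration count are arbitrary values chosen for illustration.

```python
import struct


def to_fp16(x):
    """Round a Python float to the nearest IEEE 754 half-precision value."""
    return struct.unpack("e", struct.pack("e", x))[0]


def to_fp32(x):
    """Round a Python float to the nearest IEEE 754 single-precision value."""
    return struct.unpack("f", struct.pack("f", x))[0]


# A small fp16 input, standing in for something like a tiny gradient contribution.
step = to_fp16(0.0001)
n = 10_000

fp16_total = 0.0
fp32_total = 0.0
for _ in range(n):
    fp16_total = to_fp16(fp16_total + step)  # accumulate in half precision
    fp32_total = to_fp32(fp32_total + step)  # accumulate in single precision

print(f"fp16 accumulator: {fp16_total}")
print(f"fp32 accumulator: {fp32_total}")
```

The half-precision accumulator stalls well short of the true sum of roughly 1.0, because the small step eventually falls below the rounding granularity of the running total. The wider accumulator stays close to 1.0, which is why mixed precision keeps the speed and memory benefits of fp16 inputs without giving up the accuracy of the sums.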

The enterprise grade products have some major advantages in power efficiency and in handling virtual environments. Since we are not running a bare-metal hypervisor, we chose the consumer-level RTX 2080 as offering the most bang for our buck in processing power. The ability to connect the two GPUs with NVLink also offers greater flexibility. We can run multiple training jobs simultaneously or, eventually, when the software libraries are more sophisticated, train a single model on both GPUs using the high-speed 50 GB/s link directly between the cards (rather than the much slower PCIe 3.0 rate of approximately 16 GB/s on a 16-lane connection).
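A back-of-the-envelope comparison of the two links is sketched below. The bandwidth figures are the ones quoted above and are treated as ideal peak rates; the 4 GB payload is a hypothetical amount of data exchanged between the GPUs, and real transfers will be slower than these idealised numbers.

```python
NVLINK_GB_PER_S = 50.0      # NVLink figure quoted above
PCIE3_X16_GB_PER_S = 16.0   # approximate PCIe 3.0 x16 figure quoted above


def transfer_seconds(gigabytes, gb_per_s):
    """Idealised time to move a payload over a link, ignoring latency and overhead."""
    return gigabytes / gb_per_s


payload_gb = 4.0  # hypothetical amount of data exchanged between the two GPUs

nvlink_time = transfer_seconds(payload_gb, NVLINK_GB_PER_S)
pcie_time = transfer_seconds(payload_gb, PCIE3_X16_GB_PER_S)

print(f"NVLink:       {nvlink_time:.3f} s")
print(f"PCIe 3.0 x16: {pcie_time:.3f} s")
print(f"NVLink is about {pcie_time / nvlink_time:.1f}x faster for this payload")
```

When the GPUs have to synchronise many times per training run, that per-transfer difference is repeated on every exchange.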

To avoid the latency and bottlenecks associated with data retrieval and storage, this system uses an Intel Optane 900P solid state drive in the form of an Add-In-Board (read: connected to one of the system's PCIe slots). This allows the drive to bypass the Platform Controller Hub (PCH), which is usually responsible for controlling traffic and coordinating the input / output resources used by peripheral devices and drives. In addition, Intel Optane drives use what Intel has branded 3D XPoint technology, which provides direct memory writing and is more efficient than the standard block writing found on other solid state drives.

The speed of the processor and GPUs can only be leveraged if the data gets to them quickly. This AI muscle machine is purpose-built and far outperforms a standard desktop.

We can build your AI muscle machine. Contact us at IETech.ca.

